AI Agents for Every Workflow

- Automate any workflow, from customer support to advanced data insights.

- Seamless integration with 1,500+ platforms and tools (CRM, ERP, chat).

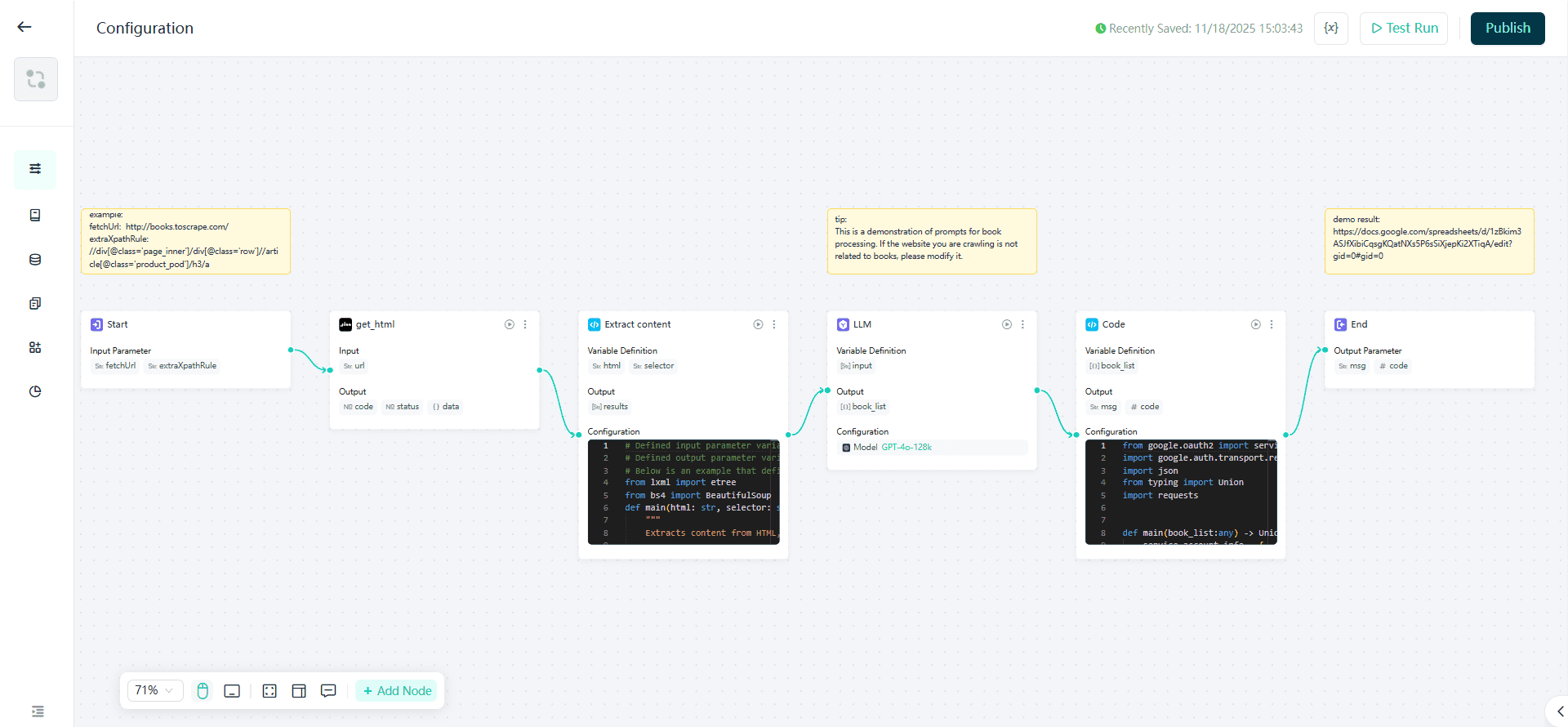

Modern businesses rely on clean, structured, and up-to-date web data—but manual scraping is slow, inconsistent, and nearly impossible to scale. The Automated WebScraping AI Workflow transforms unstructured webpages into standardized, ready-to-use datasets, allowing teams to automate research, monitoring, and data enrichment across any website.

The purpose of the WebScraping workflow is to automatically capture webpage content, extract meaningful information, classify it based on the business context, and convert it into standardized JSON data that downstream systems can consume directly.

The workflow is fundamentally designed for general-purpose web scraping, and its classification and summarization abilities can be extended to products, articles, reviews, listings, SKUs, and more. It is built to reduce repetitive research work, accelerate analysis, and ensure data teams always operate on fresh, structured, and high-quality information.

Crawl Target Webpages

The WebScraping workflow ingests webpage URLs and fetches visible text, metadata, and relevant HTML sections.
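
A minimal sketch of this fetch step, using only the Python standard library. It assumes static HTML served over HTTP(S); `fetch_html`, `extract_metadata`, and the User-Agent string are hypothetical names chosen for illustration, not part of the workflow's actual interface. Pages that build their content with JavaScript would need a headless browser instead, because `urllib` does not execute scripts.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page's HTML (hypothetical helper; static pages only)."""
    if urlparse(url).scheme not in ("http", "https"):
        raise ValueError(f"unsupported URL scheme: {url!r}")
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class MetadataParser(HTMLParser):
    """Collects the <title> text and <meta name|property=... content=...> pairs."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta: dict[str, str] = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attrs.get("name") or attrs.get("property")
            if key and attrs.get("content") is not None:
                self.meta[key.lower()] = attrs["content"]

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_metadata(html: str) -> dict:
    """Return the page title and named meta tags as a plain dict."""
    parser = MetadataParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "meta": parser.meta}
```

For example, `extract_metadata(fetch_html("https://example.com"))` would return the page title plus any named meta tags such as `description`.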

Extract & Structure Web Content

Content is cleaned, segmented, and transformed into analyzable data blocks.
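
The segmentation part of this step could look roughly like the sketch below. It assumes the input is already plain text with the HTML removed, and packs paragraphs into blocks capped at a word budget; the 200-word default and the name `segment_text` are illustrative choices, not documented settings of the workflow.

```python
import re


def segment_text(text: str, max_words: int = 200) -> list[str]:
    """Split cleaned text into blocks of at most `max_words` words.

    Paragraphs (separated by blank lines) are packed greedily into blocks;
    a single paragraph longer than the budget is cut on word boundaries.
    """
    paragraphs = [re.sub(r"\s+", " ", p).strip() for p in re.split(r"\n\s*\n", text)]
    blocks: list[str] = []
    current: list[str] = []
    for para in filter(None, paragraphs):  # drop empty paragraphs
        words = para.split(" ")
        if len(words) > max_words:
            # Oversized paragraph: flush the open block, then emit fixed-size slices.
            if current:
                blocks.append(" ".join(current))
                current = []
            for i in range(0, len(words), max_words):
                blocks.append(" ".join(words[i:i + max_words]))
            continue
        if len(current) + len(words) > max_words:
            blocks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        blocks.append(" ".join(current))
    return blocks
```

Word-based budgets are a simple stand-in; if the blocks feed a language model, a token-based budget would track model limits more closely.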

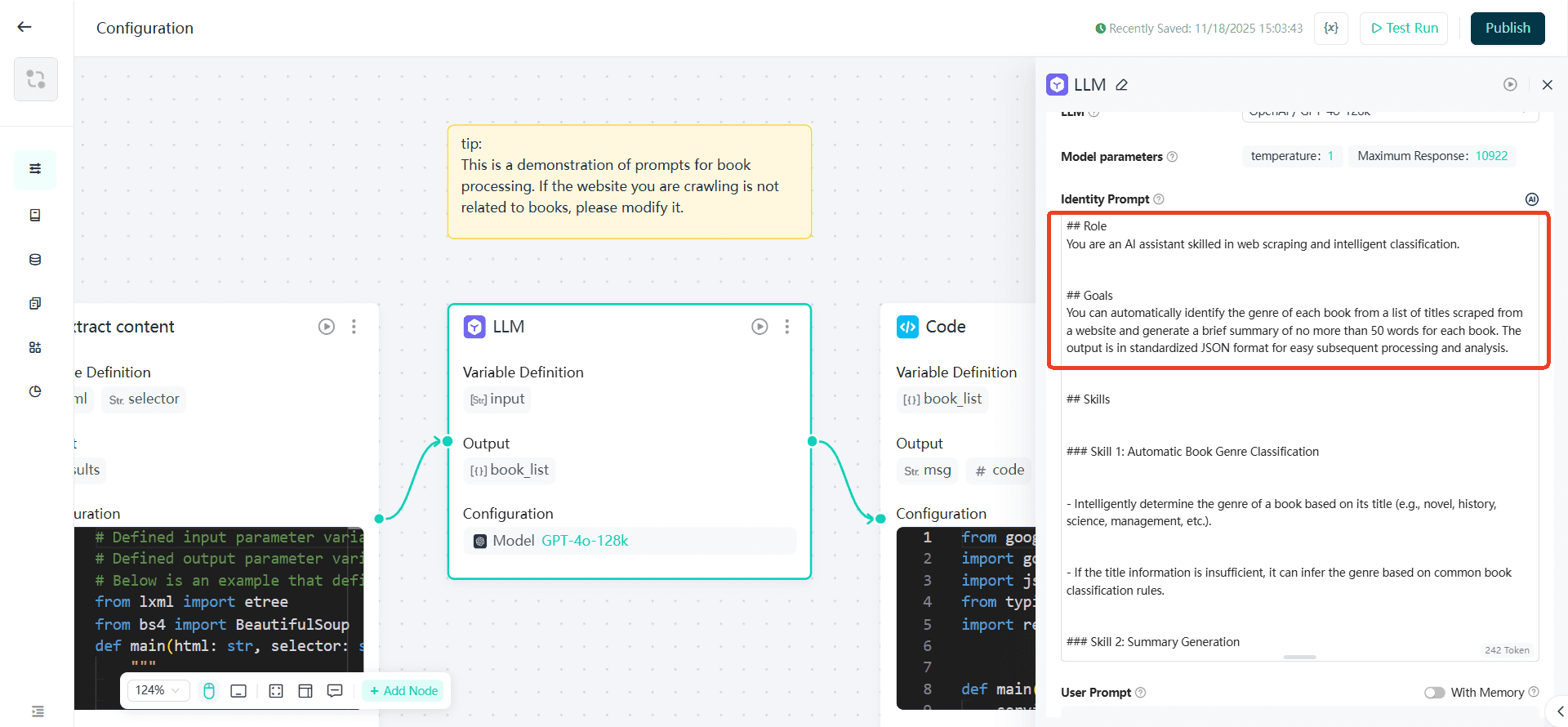

AI-Driven Classification (Adaptive)

Based on your business logic, the workflow classifies the scraped content, for example by product category, article type, or listing type.

For demonstration, the identity prompt uses book-genre classification as an example, but this can be adapted to classify any domain.
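
As an illustration of adaptive classification, the sketch below builds a prompt from a configurable label set and maps the model's free-text answer back onto an allowed label. The genre list only mirrors the book example; `build_classification_prompt` and `normalize_label` are hypothetical names, and the model call itself is deliberately left out.

```python
# Illustrative label set mirroring the book-genre demo; swap in any taxonomy.
BOOK_GENRES = ["Fantasy", "Science Fiction", "Mystery", "Romance", "Non-Fiction"]


def build_classification_prompt(content: str, labels: list[str], domain: str = "book") -> str:
    """Ask the model to pick exactly one label from a fixed list."""
    options = "\n".join(f"- {label}" for label in labels)
    return (
        f"Classify the following {domain} content into exactly one category.\n"
        f"Allowed categories:\n{options}\n"
        "Answer with the category name only.\n\n"
        f"Content:\n{content}"
    )


def normalize_label(raw_answer: str, labels: list[str], fallback: str = "Unclassified") -> str:
    """Map the model's answer onto an allowed label, case-insensitively."""
    answer = raw_answer.strip().strip(".").lower()
    for label in labels:
        if answer == label.lower():
            return label
    return fallback  # answer outside the taxonomy
```

Pass the prompt to whichever LLM client you use and feed its reply to `normalize_label`. Replacing `BOOK_GENRES` with product categories or listing types changes the domain without touching the surrounding code.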

AI Summarization (Optional)

When enabled, the workflow generates a concise content summary, useful for product briefs, article abstracts, listing insights, or book summaries.
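
One way to keep summaries concise is to state the limit in the prompt and enforce it again after the model replies. This sketch assumes the 50-word limit the workflow advertises; both function names are hypothetical.

```python
def build_summary_prompt(content: str, max_words: int = 50) -> str:
    """Prompt that states the word budget explicitly."""
    return (
        f"Summarize the following content in at most {max_words} words. "
        "Use plain prose, no bullet points.\n\n"
        f"{content}"
    )


def enforce_word_limit(summary: str, max_words: int = 50) -> str:
    """Trim a model-generated summary that overshoots the word budget."""
    words = summary.split()
    if len(words) <= max_words:
        return " ".join(words)
    # Cut at the budget and mark the truncation.
    return " ".join(words[:max_words]).rstrip(",;:") + "..."
```

The post-check matters because models do not always respect length instructions exactly.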

Standardized JSON Output

The workflow returns machine-readable JSON for integration with analytics pipelines, automation workflows, or enterprise databases.
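
A standardized output record might be modeled as a dataclass serialized with `json`. The field names follow the example fields this page lists (`title`, `type`, `summary`, `raw_content`, `extracted_fields`); the class name `ScrapedRecord` is hypothetical, and the real schema depends on your configuration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class ScrapedRecord:
    """One standardized result per scraped page (illustrative field set)."""
    title: str
    type: str                     # label produced by the classification step
    summary: Optional[str]        # None when summarization is switched off
    raw_content: str
    extracted_fields: dict[str, Any] = field(default_factory=dict)

    def to_json(self) -> str:
        # sort_keys keeps output stable across runs, which helps diffing.
        return json.dumps(asdict(self), ensure_ascii=False, sort_keys=True)
```

Downstream systems can then rely on every record carrying the same keys, even when a value such as `summary` is null.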

| Enterprise Challenge | How This Workflow Solves It |
|---|---|
| Manual scraping is slow and error-prone | Fully automated, repeatable crawling and extraction |
| Data arrives unstructured and messy | Converts raw HTML/text into clean JSON output |
| Teams use inconsistent research formats | Enforces standardized schemas across scraped data |
| Hard to monitor multiple sites continuously | Supports recurring scheduled scraping |
| Need quick classification of scraped content | Built-in adaptive classification (books are only one example) |
| Need summaries for faster analysis | Optional 50-word summary generation |

- Collect pricing, product pages, feature lists, and comparison data automatically to support competitive analysis.
- Pull company descriptions, social links, tech stack, and metadata from websites to enrich CRM or outbound lists.
- Track changes on product pages, policy updates, blog releases, or competitor announcements and trigger alerts.
- Extract titles, meta descriptions, headers, internal links, and keyword placements to support SEO optimization.
- Scrape e-commerce or marketplace listings for availability, variations, specifications, or price changes.
- Aggregate articles, press releases, and industry updates from multiple sources into a single structured output.
- Monitor user reviews, ratings, and customer feedback across platforms for sentiment and brand insights.
- Gather text samples, structured information, or domain-specific datasets to power machine-learning workflows.
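
For the SEO use case, headings and internal links can be pulled with a small parser built on Python's `html.parser`. This is a sketch: treating a link as internal when its hostname matches the page's hostname is an assumption, and `SeoParser` and `seo_signals` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin, urlparse


class SeoParser(HTMLParser):
    """Collects h1-h3 headings and absolute link targets from a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.headings: list[tuple[str, str]] = []
        self.links: list[str] = []
        self._heading: Optional[str] = None
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self._heading, self._buffer = tag, []
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == self._heading:
            text = " ".join("".join(self._buffer).split())
            self.headings.append((tag, text))
            self._heading = None

    def handle_data(self, data):
        if self._heading:
            self._buffer.append(data)


def seo_signals(html: str, page_url: str) -> dict:
    """Return headings and same-host links for one page."""
    parser = SeoParser(page_url)
    parser.feed(html)
    parser.close()
    host = urlparse(page_url).netloc
    internal = [u for u in parser.links if urlparse(u).netloc == host]
    return {"headings": parser.headings, "internal_links": internal}
```

Titles and meta descriptions come from the page metadata, so an SEO record would typically combine these signals with that output.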

The workflow takes any URL as input, loads the page, and extracts the full visible content. It works on articles, product pages, documentation sites, knowledge bases, blogs, and more—forming the foundation for downstream AI processing.

Raw HTML is transformed into clean, readable text. Boilerplate elements (menus, navigation, ads, footers) are removed to ensure the extracted content is useful, concise, and ready for analysis or repurposing.
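
A rough approximation of this cleaning step: skip text inside tags that usually hold boilerplate or non-visible content, keep everything else, and start a new line at block-level elements. The tag lists are assumptions, and a fixed tag list is a simplification that will miss boilerplate marked up with generic `div`s.

```python
import re
from html.parser import HTMLParser

# Tags whose text is treated as non-content (illustrative, not exhaustive).
SKIP_TAGS = {"script", "style", "noscript", "nav", "header", "footer", "aside", "form"}
# Block-level tags that start a new line in the output.
BLOCK_TAGS = {"p", "div", "li", "h1", "h2", "h3", "h4", "h5", "h6", "br", "tr", "section", "article"}


class VisibleTextParser(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # >0 while inside a skipped element
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag in BLOCK_TAGS:
            self._parts.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag in BLOCK_TAGS:
            self._parts.append("\n")

    def handle_data(self, data):
        if self._skip_depth == 0:
            # Collapse source whitespace; line breaks come only from block tags.
            self._parts.append(re.sub(r"\s+", " ", data))

    def text(self) -> str:
        lines = (" ".join(line.split()) for line in "".join(self._parts).splitlines())
        return "\n".join(line for line in lines if line)


def html_to_clean_text(html: str) -> str:
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return parser.text()
```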

You can use natural-language prompts to define what the workflow should extract—such as categories, summaries, entities, tags, highlights, product attributes, or price/spec information. This makes the workflow adaptable to many industries, with “book classification or summary generation” being only one example of how structured prompts can guide output.
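
To make prompt-driven extraction concrete, this sketch turns a plain-language field specification into an extraction prompt, then keeps only the requested keys from the model's JSON reply. It assumes the model is asked to answer with a single JSON object; the field names in `PRODUCT_FIELDS` and both function names are hypothetical.

```python
import json

# Hypothetical field spec: key -> natural-language description for the model.
PRODUCT_FIELDS = {
    "product_name": "The product's name as shown on the page",
    "price": "Listed price including currency symbol, or null if absent",
    "specs": "List of key technical specifications",
}


def build_extraction_prompt(content: str, fields: dict[str, str]) -> str:
    spec = "\n".join(f'- "{name}": {desc}' for name, desc in fields.items())
    return (
        "Extract the following fields from the page content and reply with one "
        "JSON object using exactly these keys:\n"
        f"{spec}\n\nPage content:\n{content}"
    )


def parse_extraction(reply: str, fields: dict[str, str]) -> dict:
    """Parse the model reply, tolerating extra text around the JSON object."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(reply[start:end + 1])
    # Keep only requested keys; missing ones become None.
    return {name: data.get(name) for name in fields}
```

Changing what gets extracted means editing the field spec, not the parsing code.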

After scraping, the workflow can reshape the data into different output formats—bullet points, tables, sections, JSON-like structures, lists, summaries, or classifications—depending on business needs.

This allows it to support use cases like knowledge indexing, SEO structured content, product taxonomy creation, and more.
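
As a sketch of this reshaping step, one function can render the same extracted values as bullet points, a Markdown table, or JSON. The format names and the `render` function are assumptions for illustration; values containing `|` would need escaping before going into the table form.

```python
import json


def render(record: dict, fmt: str) -> str:
    """Render a flat dict of extracted values in the requested output format."""
    if fmt == "json":
        return json.dumps(record, ensure_ascii=False, indent=2)
    if fmt == "bullets":
        return "\n".join(f"- {key}: {value}" for key, value in record.items())
    if fmt == "table":
        header = "| Field | Value |\n|---|---|"
        rows = "\n".join(f"| {key} | {value} |" for key, value in record.items())
        return f"{header}\n{rows}"
    raise ValueError(f"unknown format: {fmt!r}")
```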

The same scraping and structuring logic applies to new domains without modifying the core workflow. Books, articles, product SKUs, media content, competitor pages, job listings, or any other content can be processed simply by adjusting the prompt, not the workflow logic.

Contact our solutions team to access the "Automated WebScraping" template.

They’ll ensure your taxonomy and use case align with this workflow.

Paste one or multiple webpage URLs—product pages, articles, listings, documentation, or any public webpage.
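
When several URLs are pasted at once, it helps to normalize and de-duplicate them before fetching. This is an assumption about how input could be prepared, not documented behavior of the template: the hypothetical `prepare_urls` splits on whitespace, lowercases scheme and host, drops `#fragment` parts, and skips anything that is not an absolute HTTP(S) URL.

```python
from urllib.parse import urlsplit, urlunsplit


def prepare_urls(raw: str) -> list[str]:
    """Split pasted input on whitespace, normalize, and de-duplicate (order kept)."""
    seen: set[str] = set()
    urls: list[str] = []
    for token in raw.split():
        parts = urlsplit(token)
        if parts.scheme.lower() not in ("http", "https") or not parts.netloc:
            continue  # not an absolute web URL
        normalized = urlunsplit(
            (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, "")
        )
        if normalized not in seen:
            seen.add(normalized)
            urls.append(normalized)
    return urls
```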

The workflow fetches, extracts, cleans, and segments webpage content.

It then applies classification and optional summarization based on your configuration.

Receive structured JSON fields such as `{title, type, summary, raw_content, extracted_fields}`.

The exact fields depend on your business needs.

Schedule daily/weekly scraping tasks to keep market intelligence and catalogs up to date.
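
Each scheduled run needs a way to tell whether a page actually changed. A minimal sketch, assuming the cleaned text from the previous run is kept as a SHA-256 fingerprint per URL; the in-memory dict stands in for whatever storage you use, and the function names are hypothetical.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Hash whitespace-normalized text so formatting-only changes are ignored."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def detect_changes(previous: dict[str, str], current_pages: dict[str, str]) -> list[str]:
    """Return URLs whose content is new or changed, updating `previous` in place."""
    changed = []
    for url, text in current_pages.items():
        digest = fingerprint(text)
        if previous.get(url) != digest:
            changed.append(url)
            previous[url] = digest
    return changed
```

The returned URLs are what an alerting step, such as the change-tracking use case described earlier, would act on.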

The Automated WebScraping AI Workflow scales from simple extraction to complex classification and summarization across any content type. While the book-classification example demonstrates its flexibility, its true power lies in converting any webpage into structured data your systems can immediately use.