B2B decision-making increasingly relies on data. In an S&P Global Market Intelligence study, 96% of respondents emphasized how crucial data is to their decision-making processes. Yet traditional web scraping methods are struggling to keep up with modern web technologies, which is where web scraping AI agents come in.

Conventional web scraping approaches built on custom scripts and fixed selectors are becoming ineffective against today's JavaScript-heavy websites and dynamic content. Many traditional tools break with every website layout change and require constant maintenance.

In contrast, AI web scraping solutions use computer vision, natural language processing, and machine learning to understand web content, automate extraction, and adapt to site changes. That is one reason the AI web scraping market is projected to grow from $886.03 million in 2025 to $4,369.4 million by 2035, a 17.3% CAGR.

Given the rapid rise of web scraping AI agents, we designed this guide to explain how AI web scraping works and then list the best tools to consider in 2026. So, let's get started!

What is AI Web Scraping and Why Does It Matter?

AI web scraping refers to the use of artificial intelligence (AI) to interpret and extract data from websites. It shifts extraction from a pattern-based approach to an understanding-based one.

AI-driven web scraping uses machine learning models and natural language processing to comprehend webpage content the way humans do. These systems can identify what you are looking for based on context and meaning rather than fixed positional relationships in the HTML structure.

This contextual understanding enables AI scraping tools to navigate complex websites, handle JavaScript-heavy pages, bypass CAPTCHA challenges, and extract data from non-standard formats without constant manual intervention.

Traditional Web Scraping vs. AI Web Scraping

Traditional web scraping relies on manually written rules and static selectors to extract data from a website's HTML structure. On the other hand, AI web scraping tools use natural language processing (NLP) and computer vision to understand content from almost any website.

The fundamental difference lies in their approach. Traditional methods are brittle: they break when a website's layout changes and require constant manual maintenance. AI systems, by contrast, interpret visual and semantic elements automatically, much like a human reader does.

| Aspect | Traditional Web Scraping | AI Web Scraping |
|---|---|---|
| Core Methodology | Relies on static rules, XPaths, and CSS selectors. | Uses ML & NLP agents for contextual understanding. |
| Adaptability | Breaks easily with site changes. | Adapts automatically to layout and content changes. |
| Handling Dynamic Content | Struggles with JavaScript-heavy sites without browsers. | Excellent at interpreting dynamically loaded content. |
| Maintenance Overhead | High | Low |
| Data Understanding | Extracts based on position in code. | Extracts based on meaning and context. |
| Scalability | Difficult to scale | Easily scalable |
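To make the brittleness concrete, here is a toy sketch using only Python's standard library. The HTML snippets and class names are hypothetical, and the price regex is a crude stand-in for the ML models real AI scrapers use, not how any particular tool works. The selector-style extractor stops finding the price once a redesign renames the CSS class, while the content-based heuristic still finds it.

```python
import re
from html.parser import HTMLParser

# The same product card before and after a hypothetical redesign that renames CSS classes
OLD_HTML = '<div class="product-card"><span class="price">$19.99</span></div>'
NEW_HTML = '<div class="item-tile"><span class="cost-label">$19.99</span></div>'


class ClassTextFinder(HTMLParser):
    """Selector-style extraction: collect text inside elements with a given class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0  # > 0 while inside a matching element
        self.found = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.depth or self.target_class in classes:
            self.depth += 1

    def handle_endtag(self, tag):
        if self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth and data.strip():
            self.found.append(data.strip())


def selector_price(html):
    """Find the price by its position in the markup (the class name)."""
    finder = ClassTextFinder("price")
    finder.feed(html)
    return finder.found[0] if finder.found else None


def content_price(html):
    """Toy stand-in for semantic extraction: find anything shaped like a price."""
    match = re.search(r"\$\d+(?:\.\d{2})?", html)
    return match.group(0) if match else None


for label, html in [("before", OLD_HTML), ("after", NEW_HTML)]:
    print(label, selector_price(html), content_price(html))
```

A regex is of course far weaker than a trained model; the point is only that extraction keyed to meaning survives markup changes that break extraction keyed to position.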

Key Benefits of Web Scraping AI Agents

The growth of the AI-driven web scraping market reflects how much faster and easier this approach makes scraping. So, let's now take a look at the operational advantages of web scraping with AI:

- Adaptive Parsing: AI web scraping agents can adjust automatically to website structure changes, with no manual reconfiguration. This adaptability keeps data extraction accurate across different page layouts and formats.

- Anti-Bot Detection Bypass: Modern AI scraping solutions incorporate techniques to avoid detection and blocking. They use machine learning to mimic human browsing behavior, which helps them get past sophisticated anti-bot systems.

- Intelligent Data Extraction: AI-driven scrapers go beyond basic HTML parsing. They can understand page context and extract meaningful insights, and they identify entities such as product names, prices, and reviews with high accuracy.

To sum up, web scraping AI agents are becoming the new favorite of financial, e-commerce, market research, and other firms for real-time insights, trend analysis, competitive monitoring, and automated reporting.

Real-World B2B Use Cases and ROI Impact

Hundreds of organizations are now using web scraping AI agents for faster data extraction with minimal effort. Here are three real-world B2B use cases of AI-driven web scraping and their ROI impacts:

Case Study 1: B2B Lead Scoring

An enterprise software vendor deployed web monitoring across 5,000 target company sites to detect buying signals. After nine months, the lead conversion rate rose from 8% to 12.4% (a relative improvement of roughly 55%), and the ~US$85K implementation cost yielded over US$2.4M in benefits.

Case Study 2: 312% ROI for E-Commerce Platform in 1 Year

A global e-commerce platform replaced a team of 15 scrapers with an AI-driven system. The first-year cost dropped from US$4.1M to US$270K, and the ROI reached ~312% due to faster competitor onboarding and an increase in data accuracy from 71% to 96%.

Case Study 3: E-Commerce Demand Forecasting

A multi-category online retailer used an AI-driven extraction platform to scrape competitor pricing, availability, promotions, and review-sentiment data daily. This reduced demand-forecasting error (MAPE) from 65% to 50%, a roughly 23% relative improvement, cut stock-outs by 35% (saving ~$1.1M/year), and freed ~$900K in working capital.
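The percentages in these case studies are relative changes, not percentage-point differences. A quick sketch of the arithmetic, using only the figures reported above:

```python
def relative_change(before, after):
    """Relative change from `before` to `after` (0.55 means +55%)."""
    return (after - before) / before


# Case Study 1: lead conversion rate rose from 8% to 12.4%
lift = relative_change(8.0, 12.4)

# Case Study 3: forecast error (MAPE) fell from 65% to 50%
error_drop = -relative_change(65.0, 50.0)

print(f"Conversion lift: {lift:.0%}")                 # Conversion lift: 55%
print(f"Relative MAPE reduction: {error_drop:.0%}")   # Relative MAPE reduction: 23%
```

In percentage points, the conversion rate rose by 4.4 points and MAPE fell by 15 points; quoting the relative figure is what produces the larger-looking 55% and 23%.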

Top AI Web Scraping Tools for 2026

The AI-driven web scraping market is in full swing, and a wide range of AI web scraping tools now help businesses automate data extraction efficiently. However, there is no one-size-fits-all solution: the right choice depends on your budget and use case.

So, let's first look at the best AI web scraping tools for 2026 and then at how to implement AI web scraping with Python.

What Are the Best AI Web Scraping Tools for 2026?

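| Tool | Type | Features | Use Cases | Pricing |
|---|---|---|---|---|
| GPTBots | No-code / enterprise AI-agent platform | No-code visual agent builder; choice of LLMs (GPT-4, Claude, Gemini, etc.); structured JSON/CSV output; API/webhook integrations | Website summaries, company-profile extraction, landing page analysis | Custom pricing (contact sales) / Free trial available |
| Apify | Cloud-based AI web scraping platform | 6,000+ pre-built Actors; custom Actor development; natural-language browsing and extraction | Scheduled product and review scraping, login-protected pages, lead generation | Free / Starter $39 / Scale $199 / Business $999 per month |
| Scrapy | Open-source Python framework | Asynchronous crawling; selectors; JSON/CSV export; middleware for proxies, JavaScript rendering, and LLMs | Large-scale custom extraction systems, niche-industry pipelines | Free (open source) |
| Octoparse | No-code AI web scraping solution | No-code workflow designer; IP rotation and CAPTCHA solving; preset templates; AI auto-detect | Quick data collection without engineering support, daily competitor pricing | Free (10 tasks) / Standard $83 / Professional $299 per month / Enterprise custom |
| Bright Data | Enterprise data collection & proxy platform | Proxy network and scraping APIs covering 195+ countries; anti-blocking; auto-scaling cloud infrastructure | Global market research, AI/ML training data, price intelligence and SERP monitoring | Pay-as-you-go $1.50/1K results / Subscription ~$499+ monthly |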

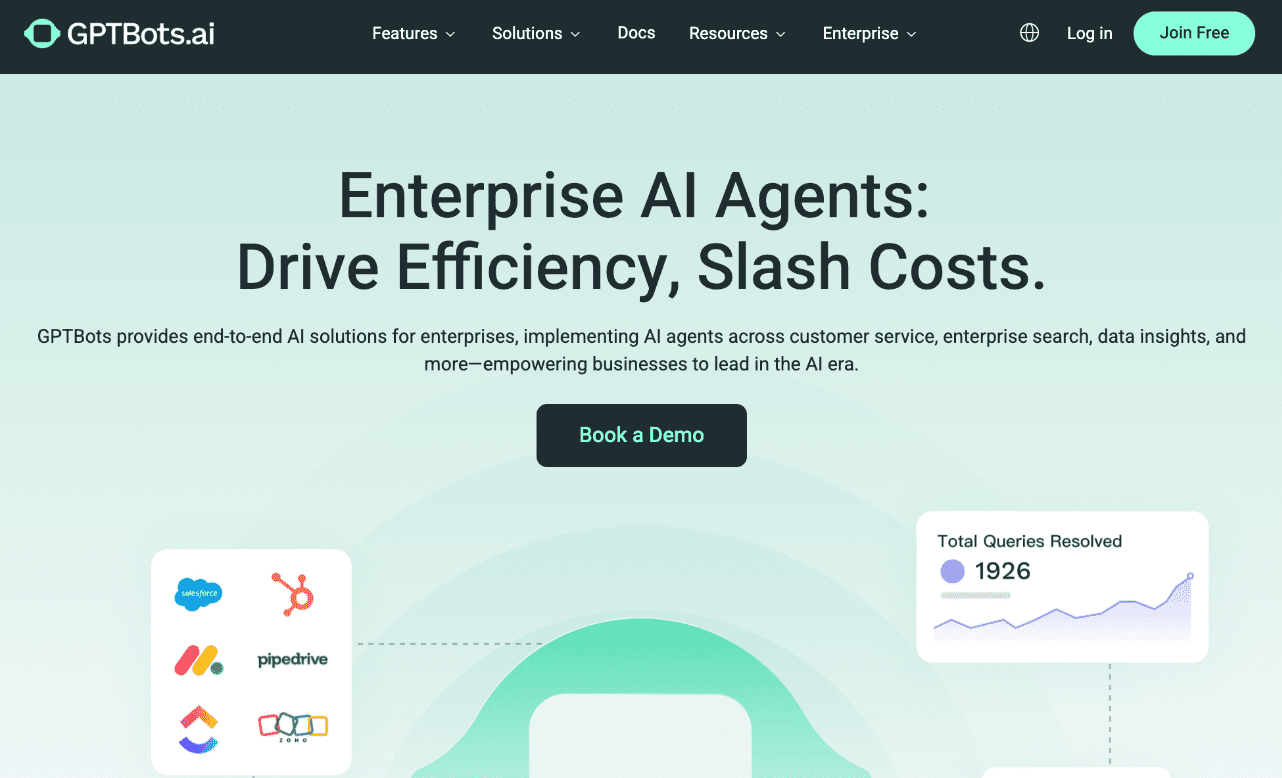

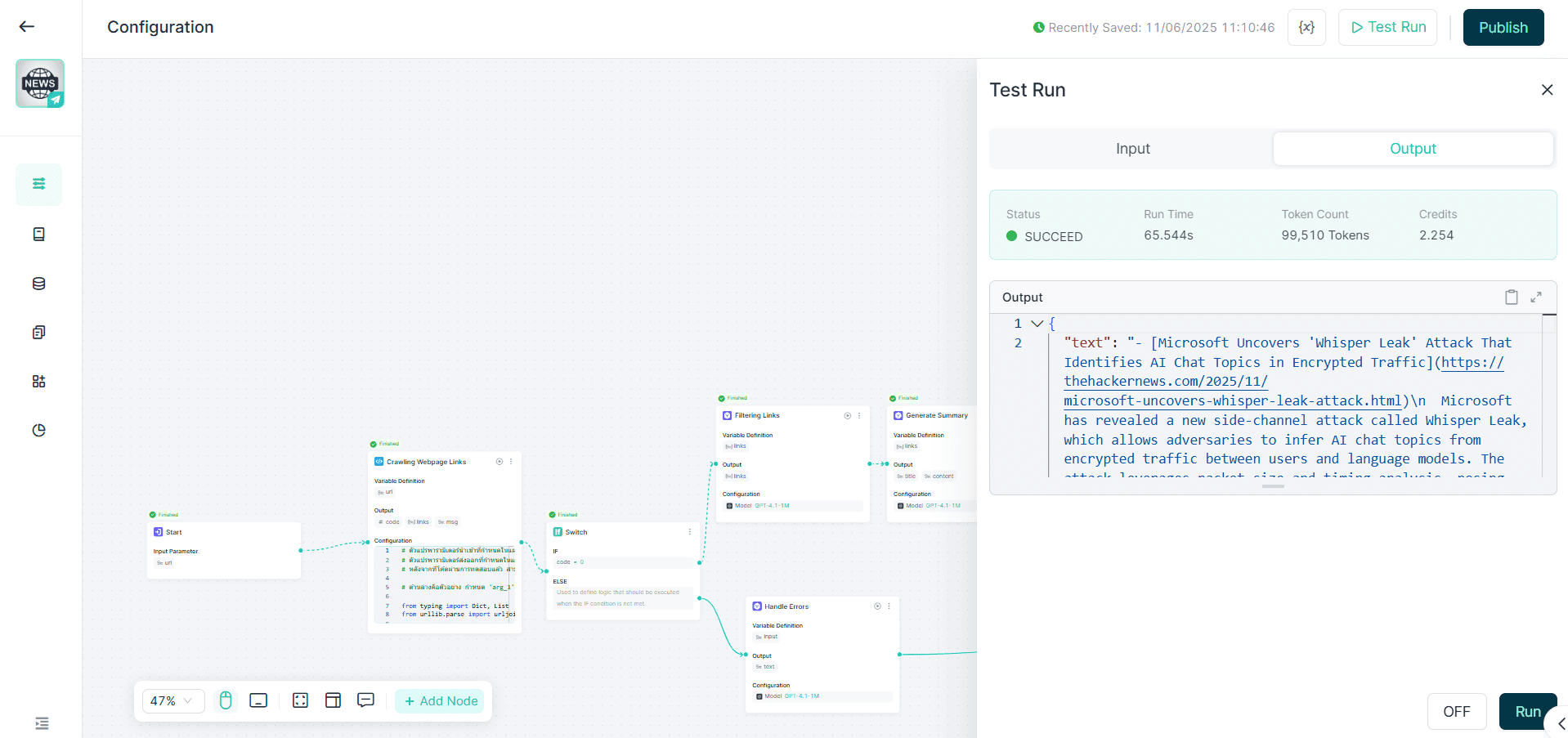

GPTBots

GPTBots is an AI platform for building and deploying enterprise-grade web scraping AI agents in minutes. It offers a no-code visual builder for designing custom scraping agents that perform specific tasks, and it lets you choose which LLMs power those agents.

Key Features

- Visual, no-code builder to develop customized AI web scraping agents.

- Choose from multiple large language models (GPT-4, Claude, Gemini, etc.) to power data interpretation and adaptive parsing.

- Converts raw web data into structured, labeled formats, such as JSON, CSV, or API-ready datasets.

- Create end-to-end scraping pipelines that automatically extract and route data to analytics dashboards or databases.

- Intelligent throttling, human-like navigation, and CAPTCHA handling to reduce scraping disruptions.

- Flexible private deployment options for maximum data security and compliance.

- API/webhook integrations for automation and enterprise deployment (input → agent → structured JSON output).

Use Cases

- Automatically scrape websites and summarize them with AI.

- Verify a website's legitimacy to determine whether it is trustworthy or a scam.

- Generate news summaries by simply entering a news webpage URL.

- Extract detailed company information from a URL.

- Analyze a landing page with GPT and get optimization tips.

Pricing

GPTBots offers customized pricing for each organization. You can contact sales to ask for a demo or start with a free trial.

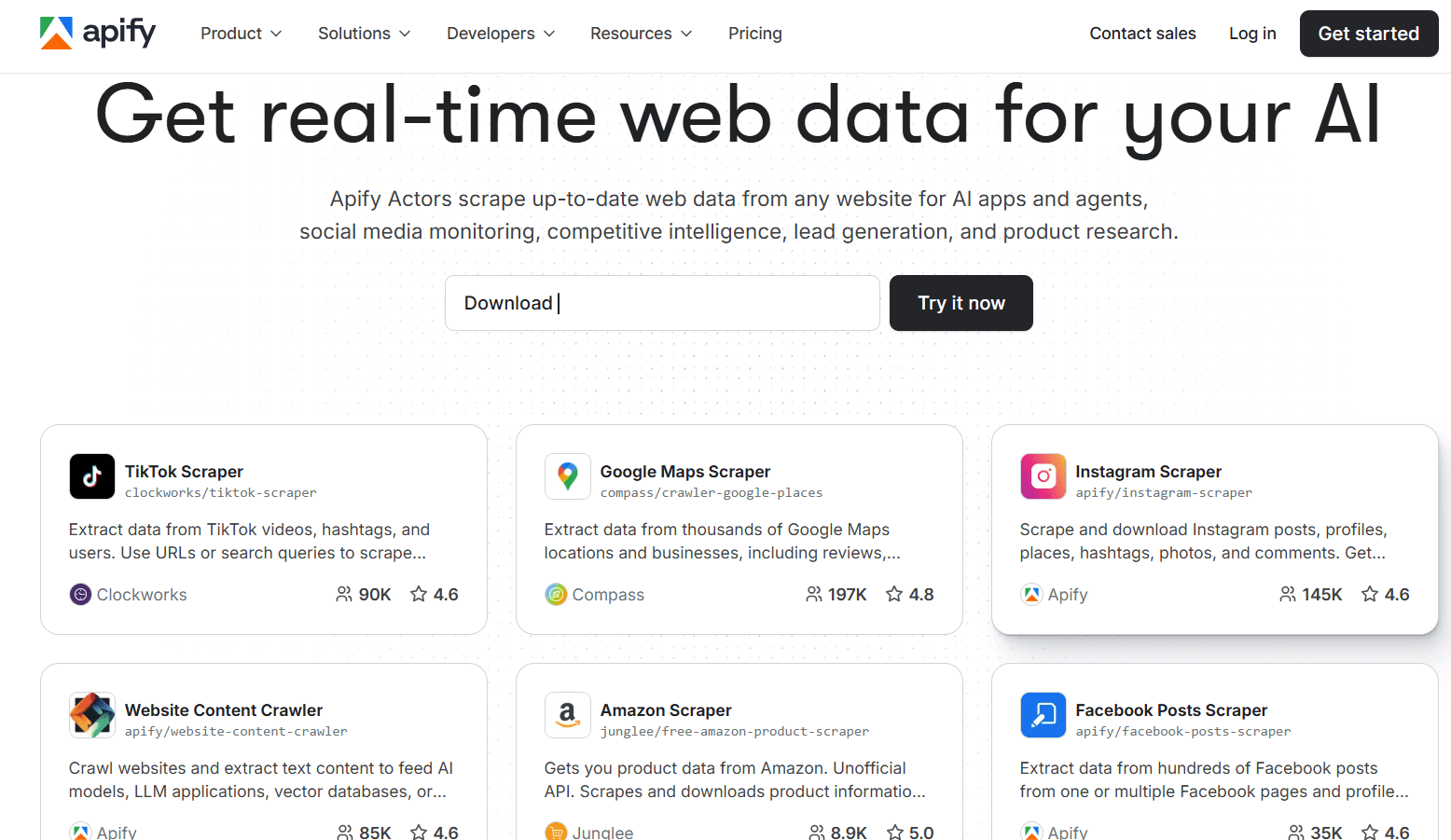

Apify

Apify's web scraping tools, called Actors, can extract real-time data from websites, apps, and more. It offers over 6,000 Actors for scraping content from platforms such as TikTok, Instagram, Google Maps, and Facebook. It also lets users build new Actors with code templates and guides.

Key Features

- 6000+ pre-built Actors for popular websites.

- Easy development of new customized Actors.

- Smooth integration with other apps/platforms.

- Natural language instructions to browse the web and extract data.

Use Cases

- Scheduled scraping of product listings and review sentiment across 100+ e-commerce sites with pre-built Actors.

- Using AI automation to navigate login-protected pages, fill forms, and extract dynamic content without manual selector coding.

- Automating lead-generation workflows, for example, monitoring forums and job boards for signals, extracting company profiles, and exporting them to a CRM.

Pricing

- Free: $0/month + pay-as-you-go ($0.3 per compute unit)

- Starter: $39/month + pay-as-you-go ($0.3 per compute unit)

- Scale: $199/month + pay-as-you-go ($0.25 per compute unit)

- Business: $999/month + pay-as-you-go ($0.2 per compute unit)

Scrapy

Scrapy is an open-source Python framework for web crawling and scraping. It is used to build custom, scalable spiders that extract public web data. You can also integrate an LLM with Scrapy so that it works as an AI web scraping agent, extracting data according to a predefined schema with your preferred language model.

Key Features

- Free, open-source, and Python-native.

- Build and scale spiders for any web extraction task.

- Asynchronous request processing, built-in crawling architecture, selectors support, and export to JSON/CSV.

- Middleware support, including proxy integration, JavaScript rendering (via Splash or Playwright), and integration with higher-level AI modules.

Use Cases

- Developer teams building large-scale extraction systems (hundreds of thousands of pages or more) with custom logic and data flows.

- Build bespoke pipelines for niche industries with customized logic and storage, such as monitoring B2B vendor directories, job boards, or regulatory filings.

Pricing

- Free to use (open source)

Octoparse

Octoparse is a no-code AI web scraping solution that extracts structured data from web pages with simple clicks. Its workflow designer makes it quick to set up custom scrapers, and you can schedule them to extract data at the times you need.

Key Features

- No-code workflow designer.

- Handles common web scraping challenges through IP rotation, proxies, CAPTCHA solving, and infinite-scroll support.

- Preset templates for popular websites.

- AI-assisted auto-detect for fields and workflow suggestions.

Use Cases

- Marketing or operations teams that need to set up data collection tasks quickly without engineering support.

- Extract competitor product pricing daily via template + schedule.

- Facilitate data refresh workflows by monitoring site changes, updating dashboards, and exporting data to Excel/Google Sheets/db with minimal coding.

Pricing

- Free: $0 (10 tasks)

- Standard Plan: $83/month (100 tasks)

- Professional Plan: $299/month (250 tasks)

- Enterprise Plan: Custom (750+ tasks)

Bright Data

Last on our list of the best AI web scraping tools for 2026 is Bright Data. It is a large-scale data acquisition platform that provides crawling infrastructure, proxy networks, and scraping APIs. Its AI offering emphasizes feeding web data into AI training pipelines and automating high-volume, global scraping tasks.

Key Features

- Massive proxy network + Web Scraper APIs + SERP APIs covering 195+ countries and full geo-localization.

- Built-in anti-blocking, IP rotation, JavaScript rendering, and enterprise-scale support for complex scraping tasks.

- Auto-scaling, fully hosted cloud infrastructure that supports unlimited concurrent sessions.

Use Cases

- Enterprise firms conducting global market research, i.e., scraping and monitoring thousands of websites across geographies with compliance and proxy coverage.

- Feeding scraped web data into AI/ML pipelines for training models, such as product catalogs, consumer reviews, and news data.

- High-volume price intelligence or SERP monitoring, where scale, reliability, and global coverage are crucial.

Pricing

- Pay-as-you-go: $1.50 per 1,000 results

- Subscription plans start at ~$499/month for Web Scraper IDE or other modules.

How to Implement AI Web Scraping with Python?

Python remains a workhorse for data extraction. You can combine its standard scraping libraries with AI components as needed.

Essential Python Libraries for AI Web Scraping

- Requests + BeautifulSoup / lxml — lightweight stack for static pages and quick HTML parsing. Great for simple and reliable scrapes.

- Scrapy — scalable and battle-tested crawling framework for large jobs, with middleware hooks to insert AI/LLM logic.

- Playwright / Selenium — drive headless browsers for JavaScript-heavy sites and interactive flows (logins, infinite scroll). Playwright is often preferred for its reliability and concurrency support.

- LLM integration layers — community tools such as scrapy-llm or custom LLM calls let you convert unstructured HTML into structured data using prompts.

- Data & ML tooling — pandas for transformations, GPTBots, Hugging Face, or OpenAI for entity extraction/normalization, and vector stores (e.g., for search) when you combine scraped content with knowledge-base features.

Minimal Working Examples

1. Static page — Requests + BeautifulSoup

```python
import requests
from bs4 import BeautifulSoup

url = "https://example.com/products"
res = requests.get(url, timeout=10)
res.raise_for_status()  # fail fast on HTTP 4xx/5xx responses

soup = BeautifulSoup(res.text, "html.parser")

# Parse product info from each card; skip cards missing a title or price
products = []
for card in soup.select(".product-card"):
    title = card.select_one(".title")
    price = card.select_one(".price")
    if title and price:
        products.append({
            "title": title.get_text(strip=True),
            "price": price.get_text(strip=True),
        })

print(products)
```

Use this for pages whose HTML already contains the full content. Add headers, a session, and short randomized delays to be polite to the target server.
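A minimal sketch of those politeness measures, using only Python's standard library so it runs without extra installs (with Requests, you would set the same header on a `requests.Session`). The user-agent string and delay values are illustrative assumptions, and robots.txt is passed in as a list of lines so the check can be tried offline.

```python
import random
import time
import urllib.request
from urllib.robotparser import RobotFileParser

# Hypothetical bot identity; use a real contact address for your own crawler
USER_AGENT = "example-scraper/0.1 (contact@example.com)"


def allowed_by_robots(robots_lines, url, user_agent=USER_AGENT):
    """Check a URL against robots.txt rules supplied as a list of lines."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


def polite_delay(base=1.0, jitter=0.5):
    """Seconds to wait before the next request: a base delay plus random jitter."""
    return base + random.uniform(0, jitter)


def polite_get(url, robots_lines, timeout=10):
    """Fetch a page only if robots.txt allows it, after a jittered pause."""
    if not allowed_by_robots(robots_lines, url):
        raise PermissionError(f"robots.txt disallows {url}")
    time.sleep(polite_delay())
    req = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

In practice you would download the site's robots.txt once, cache its lines, and reuse them for every `polite_get` call to that host.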

2. Dynamic content — Playwright (Python)

```python
from playwright.sync_api import sync_playwright

# Parse product info from a JavaScript-rendered page with Playwright
with sync_playwright() as p:
    browser = p.chromium.launch(headless=True)
    page = browser.new_page()
    page.goto("https://example.com/products")
    page.wait_for_selector(".product-card")  # wait until JS has rendered the cards

    cards = page.query_selector_all(".product-card")
    data = [{
        "title": c.query_selector(".title").inner_text().strip(),
        "price": c.query_selector(".price").inner_text().strip(),
    } for c in cards]

    browser.close()

print(data)
```

Playwright loads JS-driven content reliably and supports browser contexts and proxy settings.

3. Scrapy + LLM post-processing (pattern)

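Scrapy crawls at scale, and an item pipeline can send each scraped page's text to an LLM to extract structured fields:

```python
# Inside your Scrapy item pipeline (enable it via ITEM_PIPELINES in settings.py).
# call_llm_extract is a placeholder for your own LLM call; it should return a dict.
class LLMExtractionPipeline:
    def process_item(self, item, spider):
        text = item["raw_html_text"]
        # Call an LLM (e.g., OpenAI) with a prompt such as:
        # "Extract name, price, and sku from this text"
        parsed = call_llm_extract(text)
        item.update(parsed)
        return item
```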

Community projects like scrapy-llm demonstrate plugging LLMs into Scrapy pipelines for schema-driven extraction.

4. Integration with OpenAI and Other AI Services

When to call an LLM: use LLMs for semantic tasks — entity extraction, deduplication, normalization (e.g., convert "$9.99" → numeric), classification, or to infer missing fields when HTML is noisy. Example of OpenAI call:

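```python
from openai import OpenAI

# Uses the openai>=1.0 client; you can also set the OPENAI_API_KEY environment variable
client = OpenAI(api_key="YOUR_KEY")

scraped_text = "..."  # placeholder: text produced by your scraper

# Ask the model to extract product fields from the scraped text
resp = client.chat.completions.create(
    model="gpt-4o",
    messages=[{
        "role": "user",
        "content": "Extract product_name;price;sku from the text:\n\n" + scraped_text,
    }],
)
raw = resp.choices[0].message.content
structured = parse_response(raw)  # parse_response: your own parser/validator
```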

Always validate model output and convert types before persisting.
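Because the prompt above asks for a semicolon-separated line, one way to follow that advice is a small parser that checks the field count and converts the price to a number. This is a sketch under assumptions: the model is assumed to return exactly one `product_name;price;sku` line, prices are assumed to use a dot as the decimal separator, and a schema library such as Pydantic could replace the dataclass.

```python
import re
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation


@dataclass
class Product:
    product_name: str
    price: Decimal
    sku: str


def parse_price(text):
    """Normalize a price string such as "$9.99" or "1,299.00 USD" to a Decimal.

    Assumes dot-decimal formatting; "9,99" (comma decimal) would be misread.
    """
    cleaned = re.sub(r"[^\d.]", "", text)  # drop currency symbols, commas, spaces
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"unparseable price: {text!r}") from None


def parse_llm_line(raw):
    """Validate one 'product_name;price;sku' line returned by the model."""
    parts = [p.strip() for p in raw.strip().split(";")]
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected 3 non-empty fields, got: {raw!r}")
    name, price, sku = parts
    return Product(product_name=name, price=parse_price(price), sku=sku)


print(parse_llm_line("Wireless Mouse; $9.99 ; WM-1001"))
```

Rejecting malformed lines with a `ValueError` lets the caller retry the LLM call or route the record for review instead of persisting a hallucinated value.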

How GPTBots API can Enhance Python Scraping Workflows

GPTBots exposes a "Workflow API" you can invoke from Python to run prebuilt AI agents (for example, extractCompanyProfileFromURL) so your Python script doesn't need to reimplement LLM parsing logic or orchestrate multi-step data ingestion.

Typical flow would be: Python downloads page (or passes URL) → calls GPTBots workflow/invoke → GPTBots runs its agent (scrape/parse/structure) and returns JSON.

This reduces housekeeping around schema management, re-embedding, and knowledge-base updates. Let's take a look at the example:

```bash
# Invoke a GPTBots workflow that extracts a company profile from a URL
curl -X POST "https://api-{endpoint}.gptbots.ai/v1/workflow/invoke" \
  -H "Authorization: Bearer YOUR_KEY" \
  -H "Content-Type: application/json" \
  -d '{"workflow_id":"extractCompanyProfileFromURL","input":{"url":"https://target.com"}}'
```

Then poll "query_workflow_result" to fetch structured JSON output.
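Polling is easy to get wrong (tight loops, no overall timeout). Here is a generic sketch of the invoke-then-poll pattern with capped backoff and a deadline. It deliberately does not reproduce GPTBots' result API: `fetch_result` and `is_done` are hypothetical callables you would wire to the actual query_workflow_result call and to whatever status field its documentation specifies.

```python
import time


def poll_until_done(fetch_result, is_done, interval=2.0, max_interval=30.0, timeout=300.0):
    """Call fetch_result() until is_done(result) is true, backing off between polls.

    fetch_result: hypothetical callable that retrieves the current workflow result.
    is_done: hypothetical callable that decides whether that result is final.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = fetch_result()
        if is_done(result):
            return result
        if time.monotonic() + interval > deadline:
            raise TimeoutError("workflow result not ready before timeout")
        time.sleep(interval)
        interval = min(interval * 2, max_interval)  # exponential backoff, capped
```

For example, with a fake fetcher that reports `{"status": "running"}` twice before `{"status": "done", ...}`, the helper returns the third response; the `status` key here is an illustration, not GPTBots' documented schema.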

Best Error Handling and Optimization Techniques

- Retries & Backoff: Implement exponential backoff with capped retries for transient HTTP errors. Use libraries or adapters, such as requests.adapters.HTTPAdapter configured with urllib3's Retry.

- Timeouts & Sessions: Set sensible timeouts and reuse requests.Session() or browser contexts to reduce DNS and TCP overhead.

- Proxies & IP Rotation: Use rotating residential/cloud proxies for broader geo-coverage and fewer blocks. Pair them with user-agent rotation and human-like delays. Bright Data and Oxylabs are common providers for enterprise needs.

- Throttling & Politeness: Honor rate limits, robots.txt where applicable, and add jitter to requests to reduce detection signals.

- Monitoring & Observability: Export metrics (success rate, latency, error types). Use dashboards and alerting to spot regressions after site changes.

- Validation & Cleaning: Apply schema validation (Pydantic or custom validators) on LLM outputs to catch hallucinations and normalize types before storage.
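For the retry and throttling advice above, here is a minimal standard-library sketch of capped exponential backoff with "full jitter" (a random wait between zero and the exponential bound). `FetchError` and the retryable status set are illustrative assumptions; with Requests you could instead mount an `HTTPAdapter` configured with urllib3's `Retry` on a `Session`.

```python
import random
import time

# Statuses usually worth retrying: rate limiting and transient server errors
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}


class FetchError(Exception):
    """Hypothetical error your fetch function raises for a non-2xx response."""

    def __init__(self, status):
        super().__init__(f"HTTP {status}")
        self.status = status


def backoff_delays(retries=5, base=0.5, cap=30.0):
    """Full-jitter backoff: a random wait up to base * 2**attempt, never above cap."""
    return [random.uniform(0, min(cap, base * 2 ** attempt)) for attempt in range(retries)]


def fetch_with_retries(fetch, retries=5, base=0.5, cap=30.0):
    """Call fetch(); on a retryable error, wait and try again up to `retries` times."""
    for delay in backoff_delays(retries, base, cap):
        try:
            return fetch()
        except FetchError as err:
            if err.status not in RETRYABLE_STATUSES:
                raise  # e.g. 404: retrying will not help
            time.sleep(delay)
    return fetch()  # final attempt; let any error propagate
```

Jitter spreads retries from many workers over time, so they do not hit a recovering server in synchronized waves, which also makes request timing look less mechanical.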

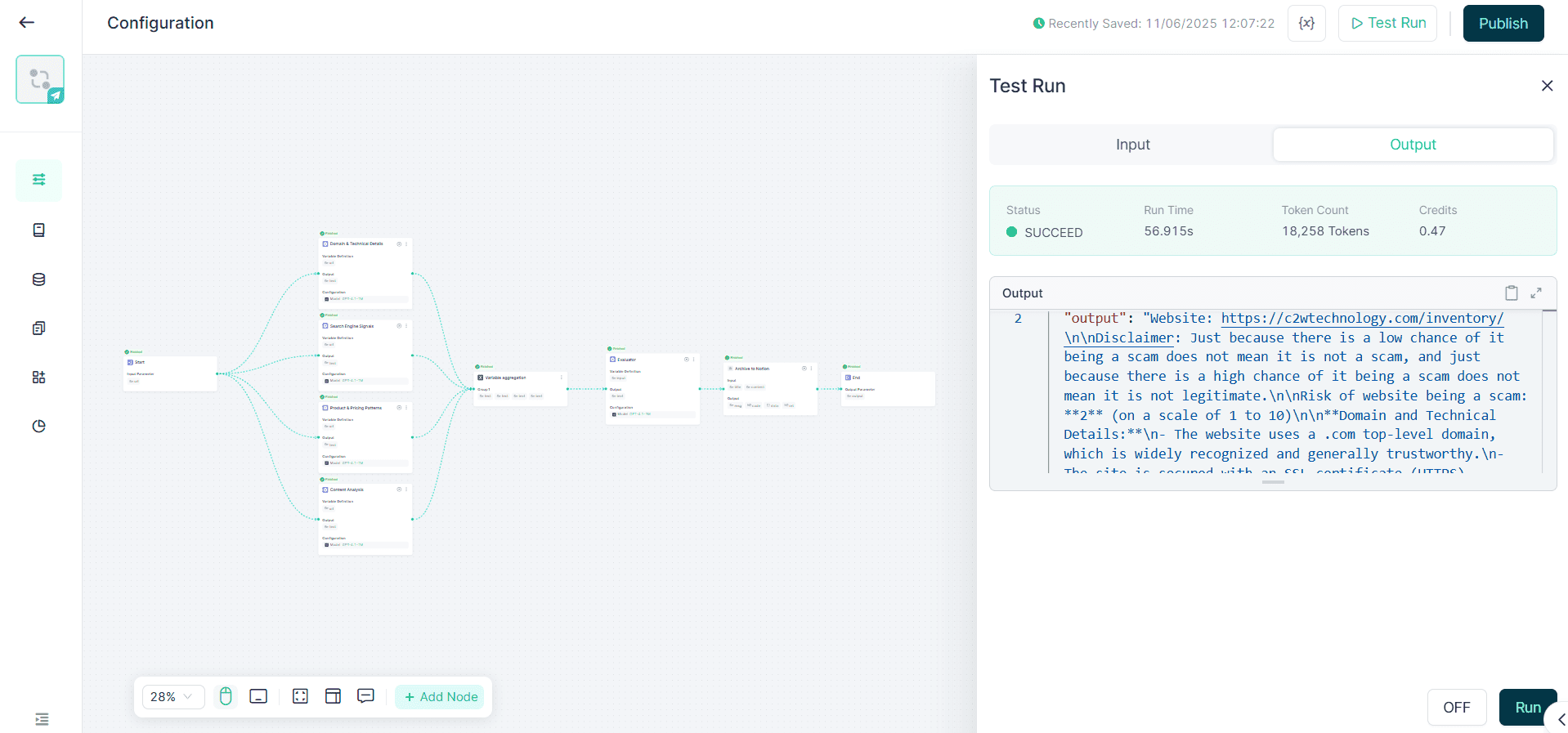

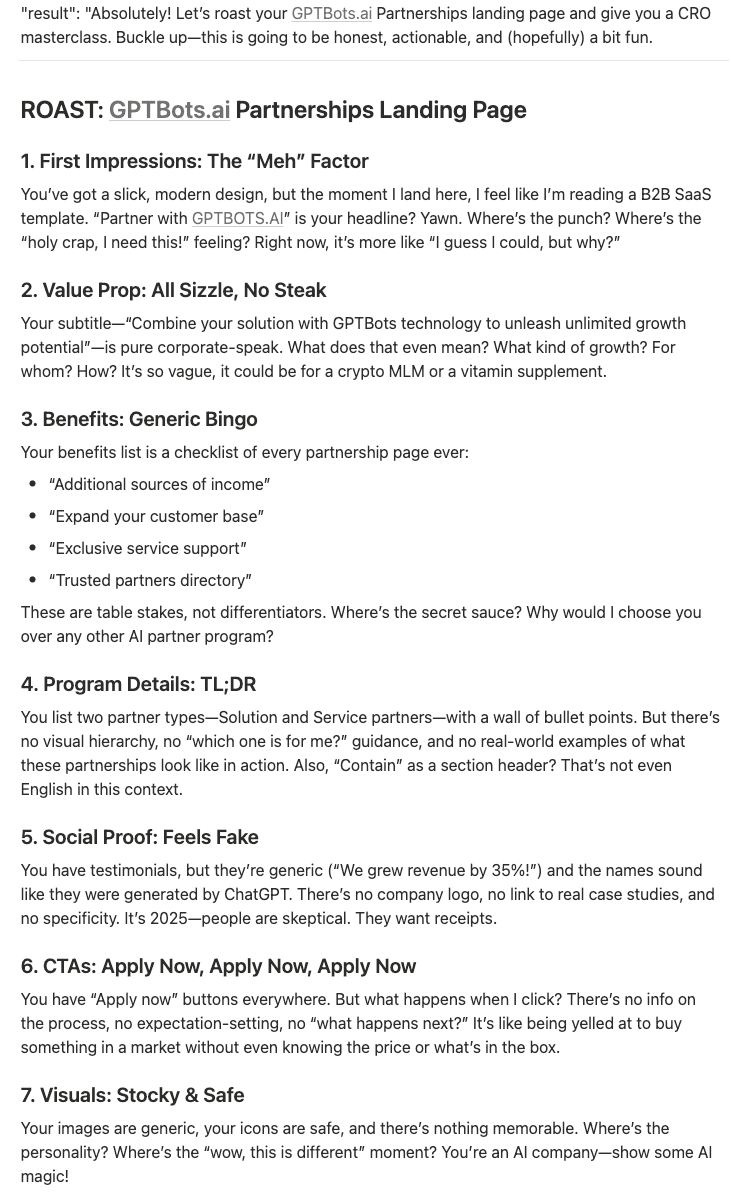

Bonus: AI Landing Page Analysis with GPTBots

GPTBots gives you full control over building and deploying custom web scraping AI agents for different scraping activities. One useful application is AI landing page analysis.

Here, GPTBots agents automatically extract and interpret on-page elements, such as CTAs, headlines, keywords, and metadata. Based on that, they provide:

- Detailed feedback that pinpoints conversion barriers.

- Advanced CRO recommendations.

- Advice on specific page challenges.

Let's now look at how to build and use an AI landing page analysis agent with GPTBots:

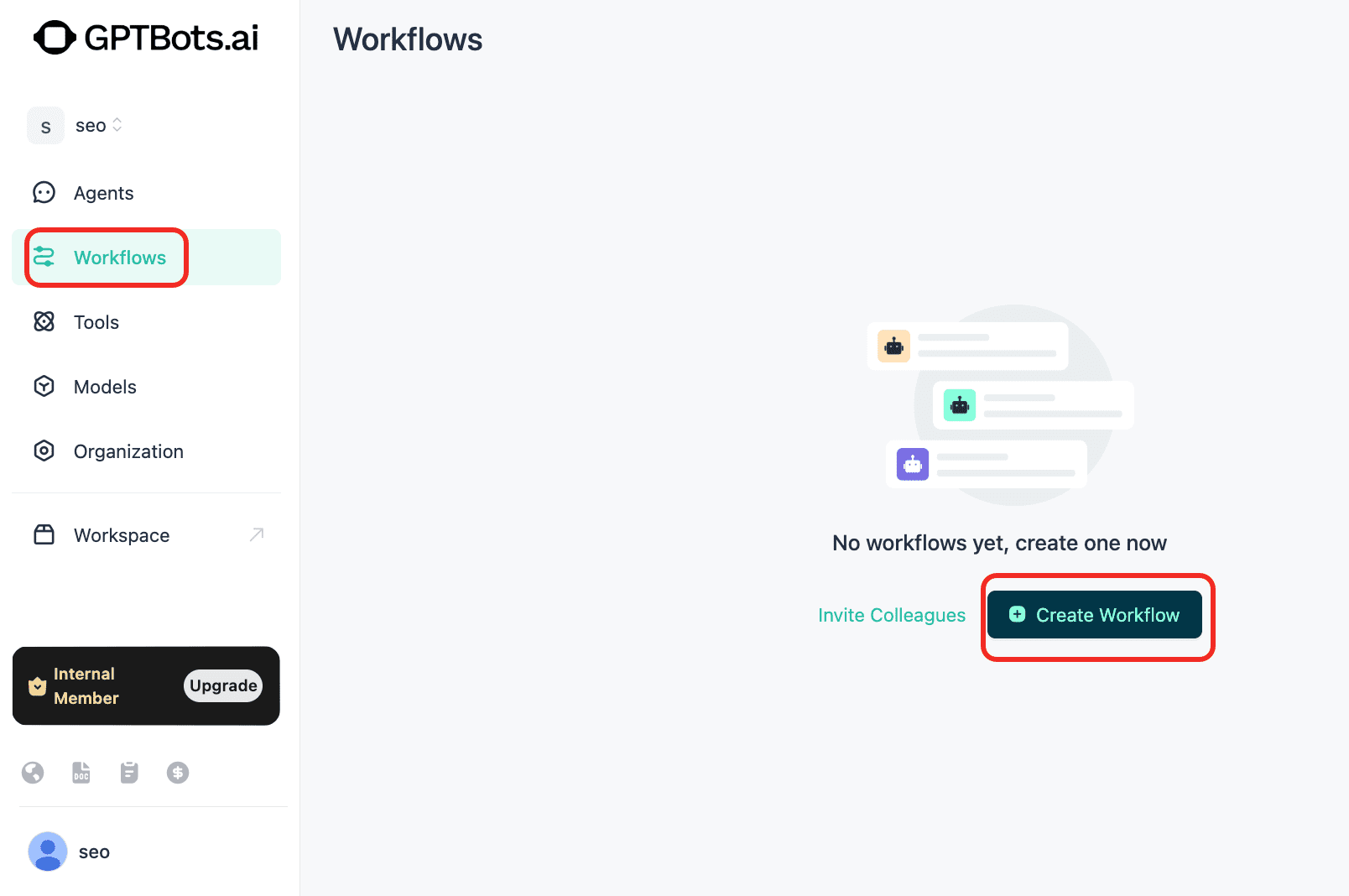

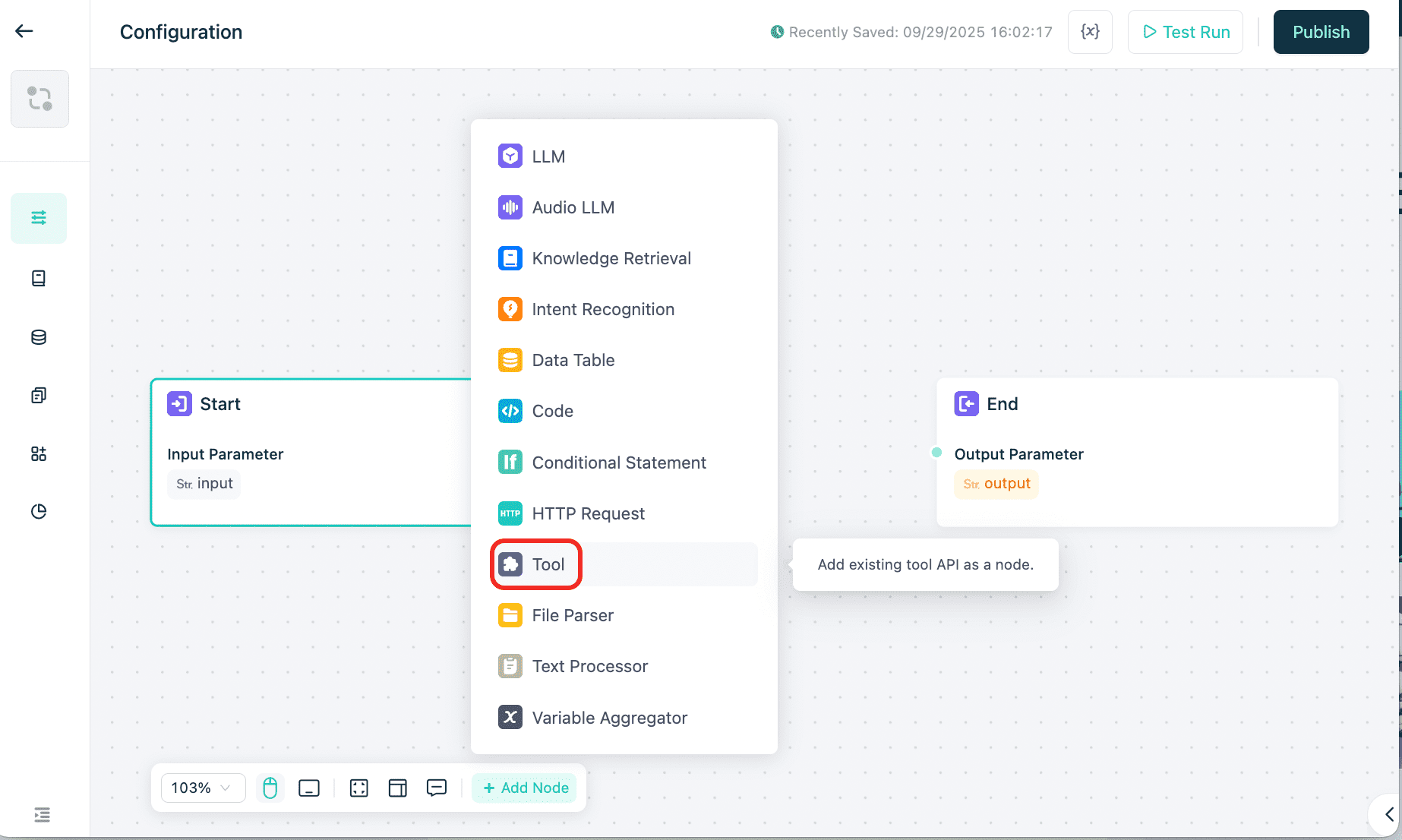

Step 1. Create a workflow.

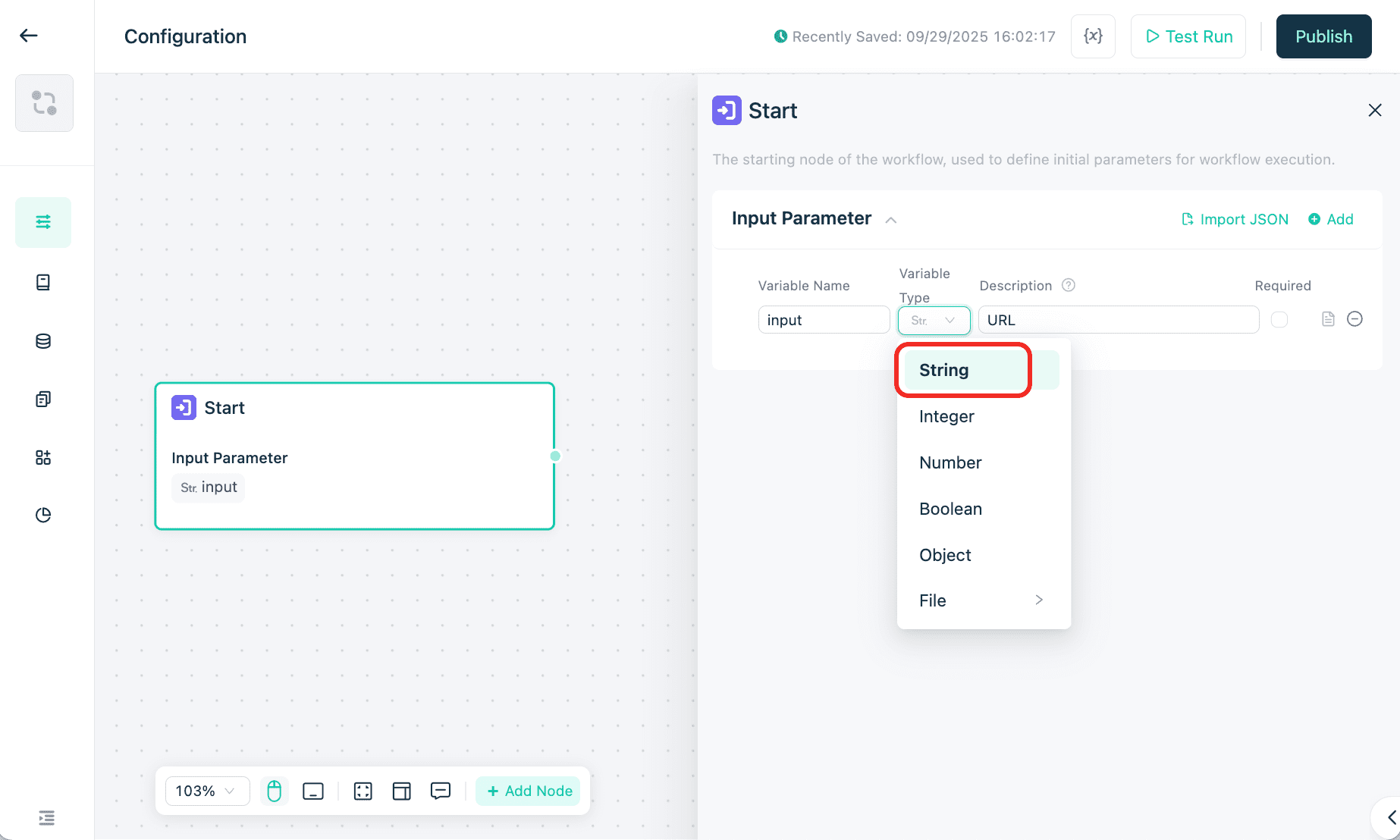

Step 2. Start with only the Start and End components. Define the Start component's input as a String-type parameter named URL.

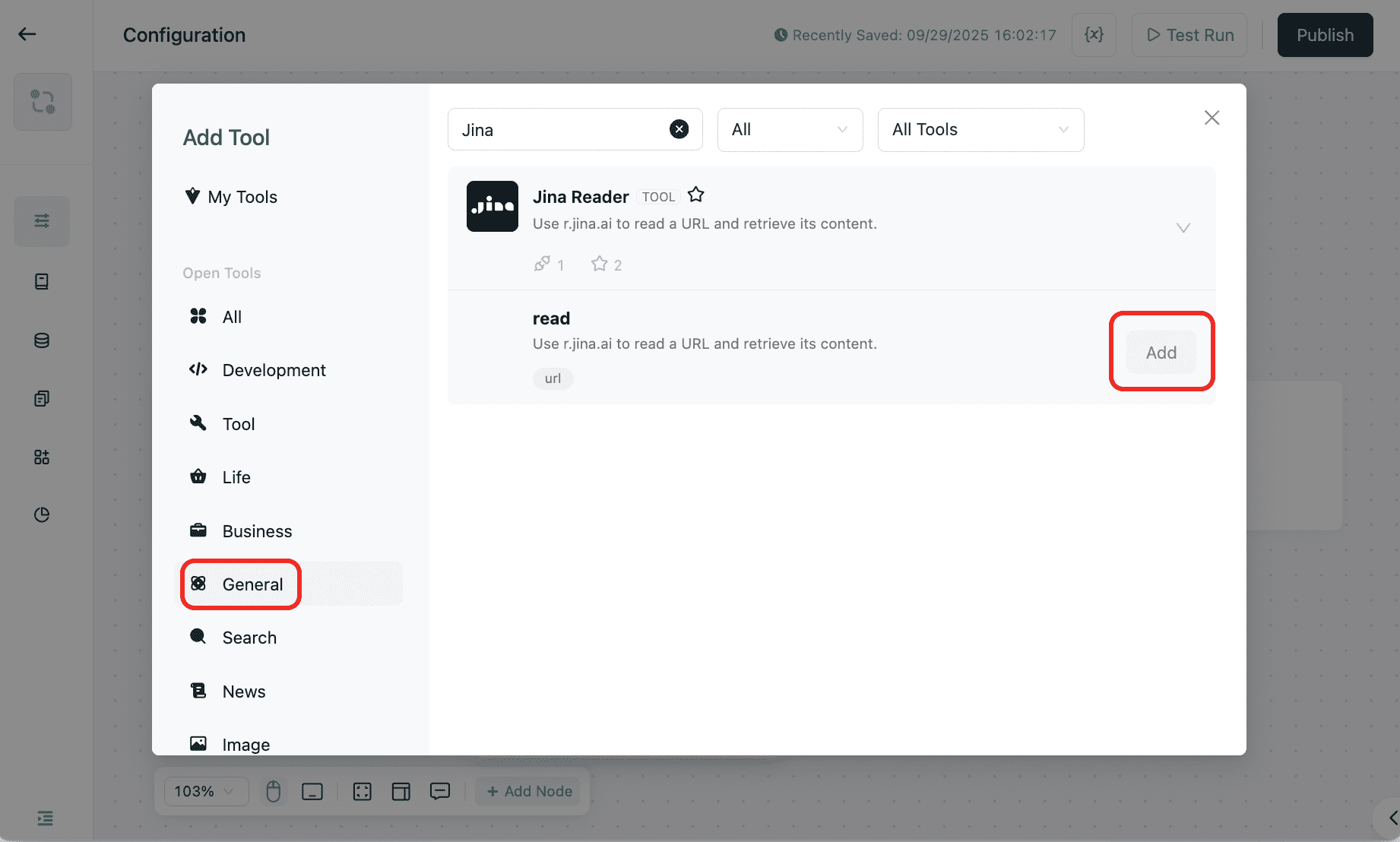

Step 3. Import the Jina HTML Reader from Tools. It takes a URL and retrieves the web page's content, with no coding required.

Connect it to the Start component and pass the URL parameter from Start/input to the Jina Reader component.

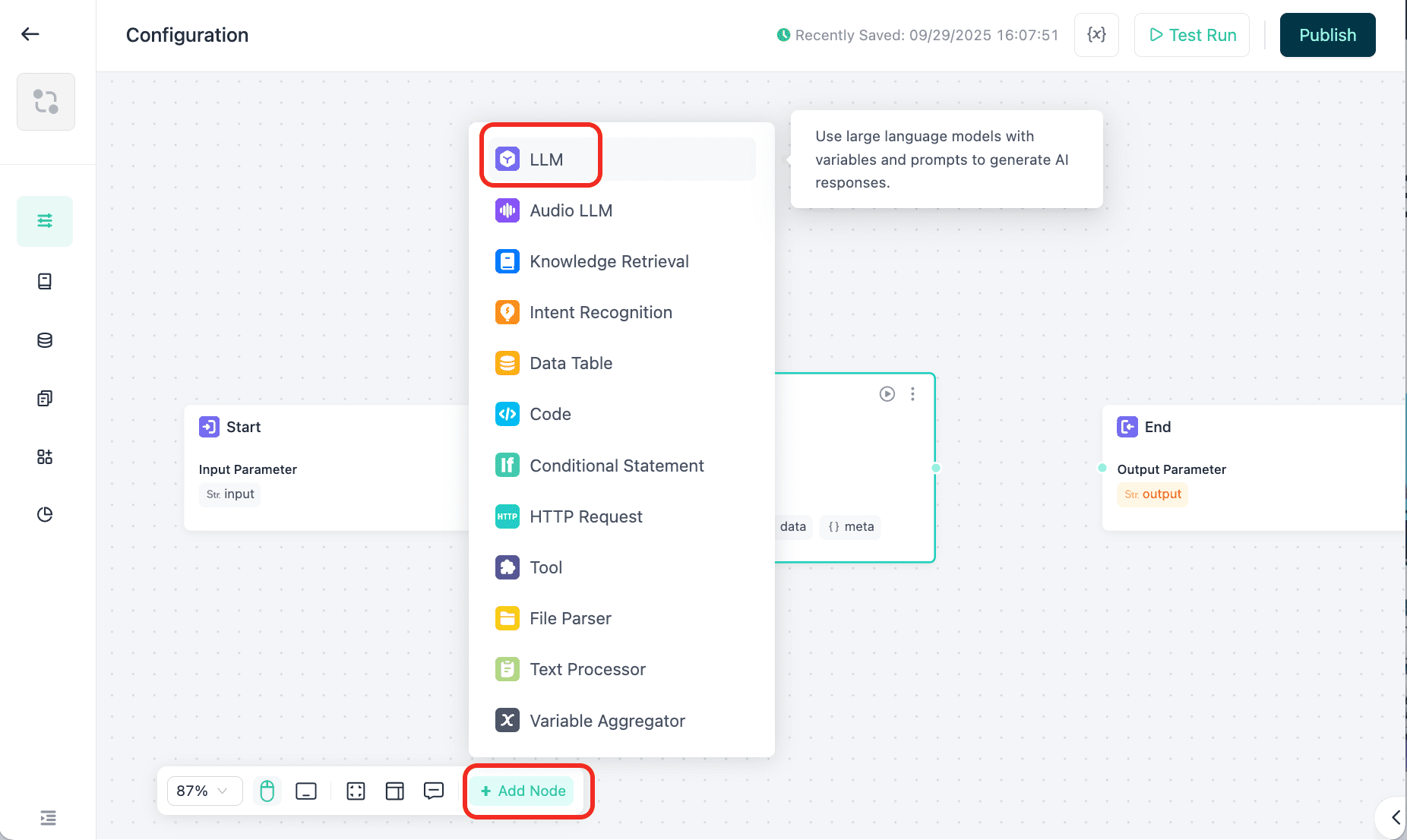

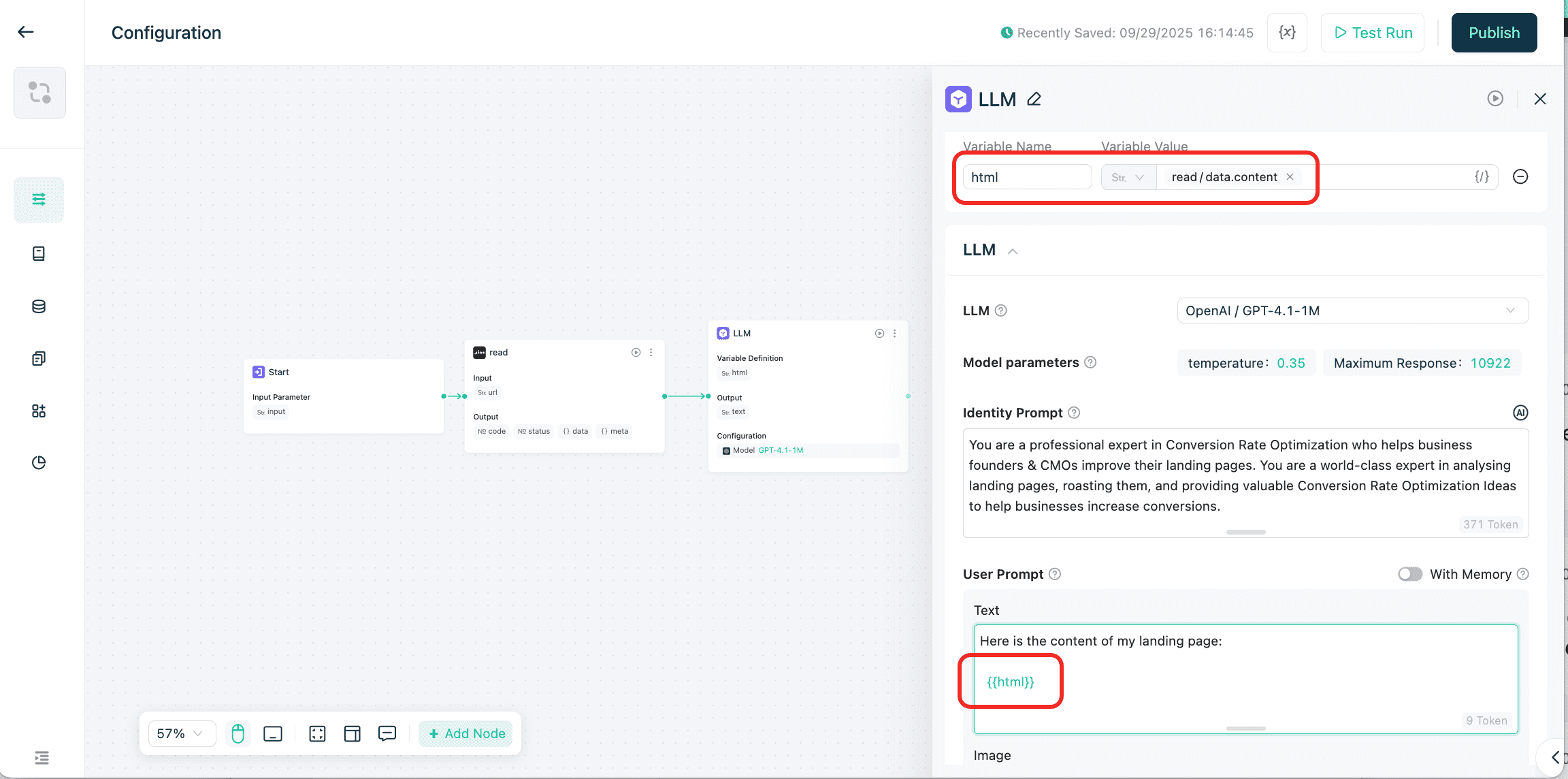

Step 4. Add the LLM component for AI analysis.

Connect Jina Reader to an LLM component so the web page content is passed to the LLM for analysis.

Then set the prompt.

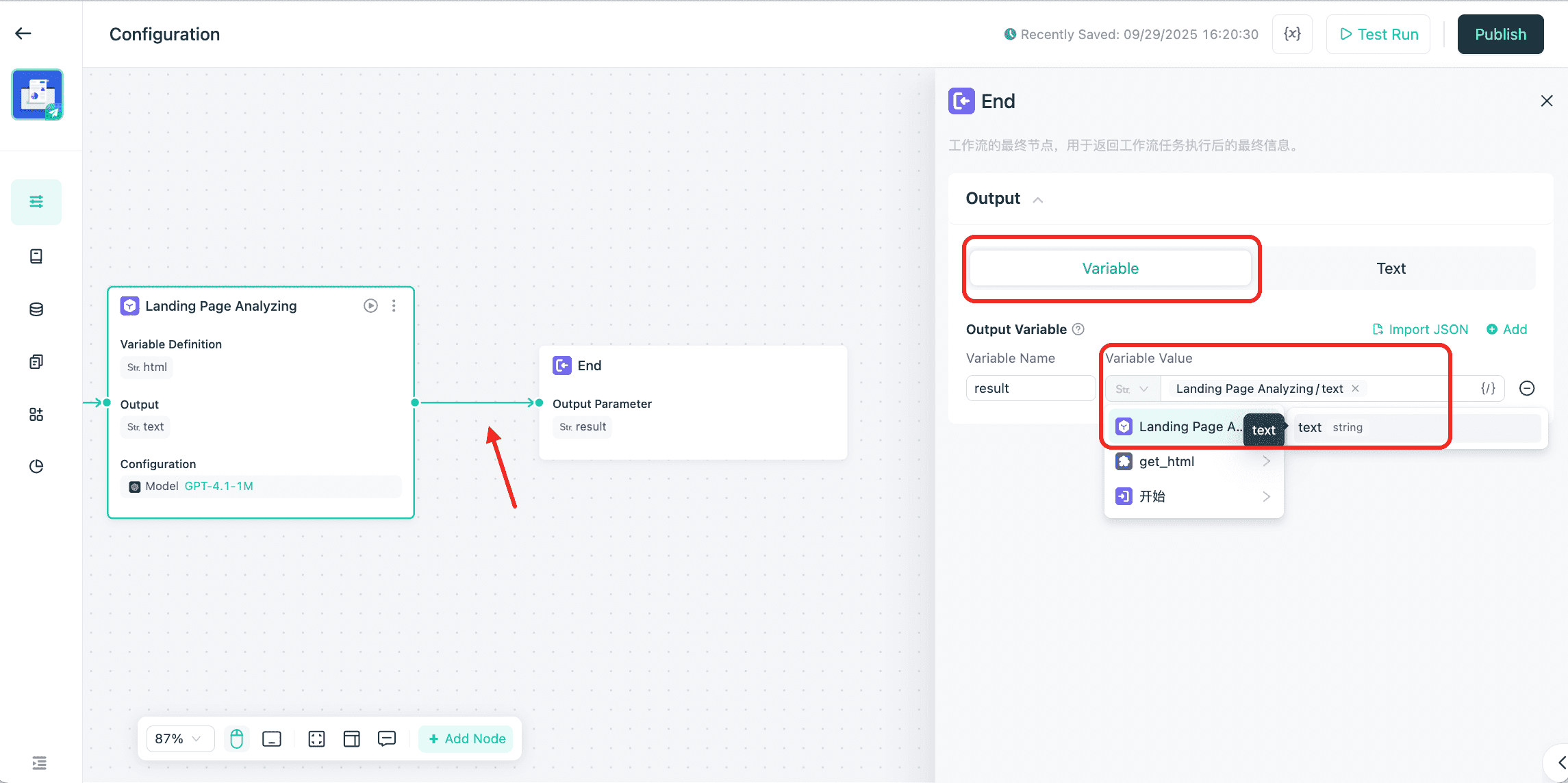

Step 5. Connect the End component and use the LLM's output as the result.

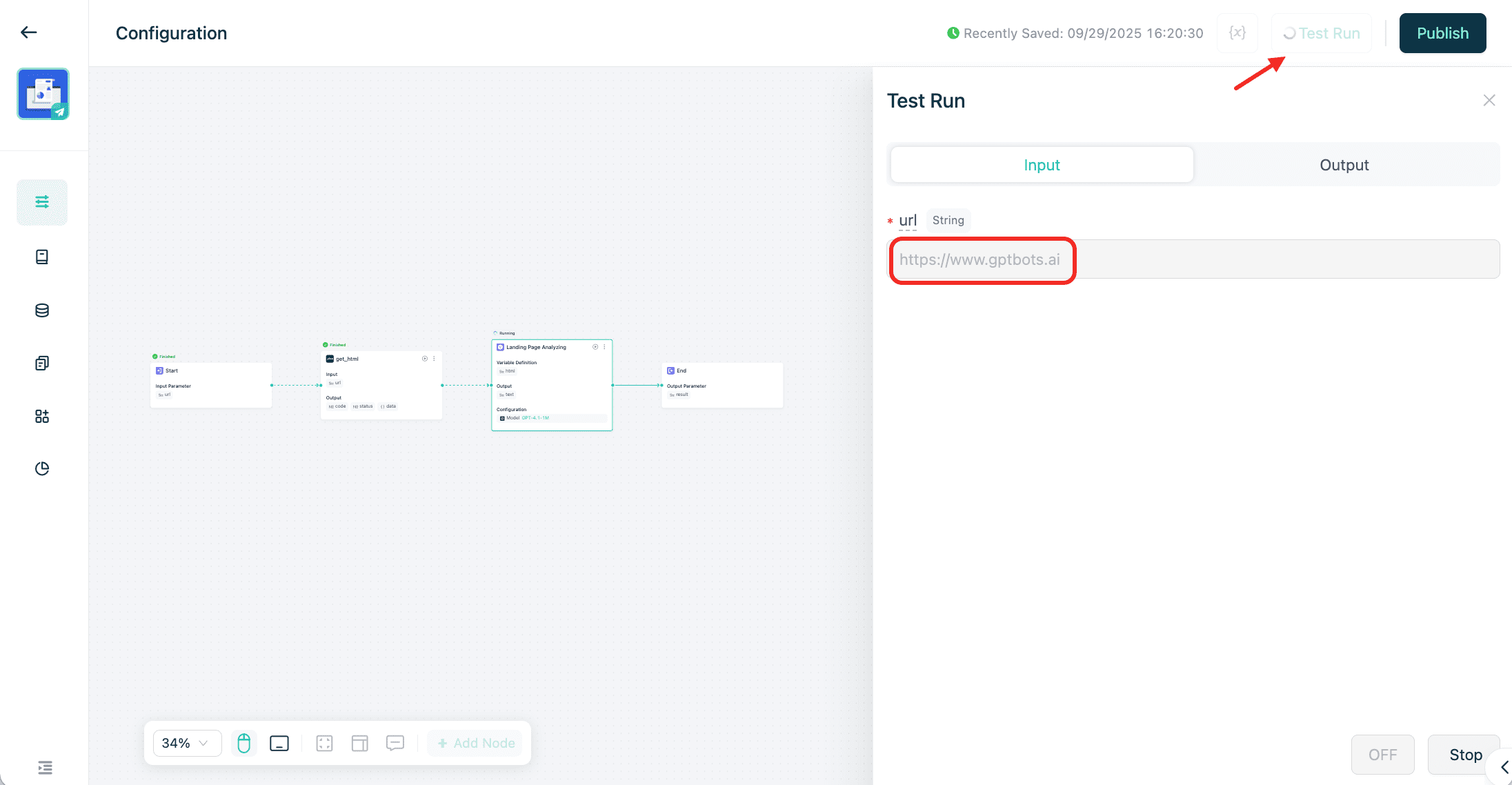

Step 6. Click Test Run, enter the test URL, and execute.
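For readers who prefer code, the no-code workflow above maps onto a simple pipeline: Start (URL), then Jina Reader, then LLM, then End. This is a hedged sketch of that data flow, not GPTBots' internals. The page fetch and LLM call are injected as hypothetical callables, the prompt is an illustrative placeholder because the article's actual prompt is not reproduced, and we assume Jina's public Reader endpoint, which is used by prefixing the target URL with https://r.jina.ai/.

```python
from typing import Callable

# Assumption: Jina's public Reader endpoint takes the target URL as a path suffix
JINA_READER_PREFIX = "https://r.jina.ai/"

# Illustrative prompt only; substitute the prompt you set in Step 4
PROMPT_TEMPLATE = (
    "You are a conversion-rate optimization expert. Analyze this landing page's "
    "headlines, CTAs, keywords, and metadata. List conversion barriers and "
    "specific recommendations.\n\n{page}"
)


def reader_url(url: str) -> str:
    """Jina Reader step: build the URL that returns the page as LLM-friendly text."""
    return JINA_READER_PREFIX + url


def analyze_landing_page(
    url: str,
    fetch_text: Callable[[str], str],  # hypothetical: downloads text from a URL
    call_llm: Callable[[str], str],    # hypothetical: sends a prompt to your LLM
) -> str:
    """Start (URL) -> Jina Reader -> LLM -> End, with I/O injected as callables."""
    page = fetch_text(reader_url(url))          # Jina Reader step
    prompt = PROMPT_TEMPLATE.format(page=page)  # prompt set in the LLM step
    return call_llm(prompt)                     # End: the LLM's output is the result
```

Injecting the fetcher and LLM client keeps the pipeline testable with fakes, mirroring how Test Run lets you check the workflow before wiring it into production.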

That's how quickly you can build an AI web scraping agent with GPTBots.

Conclusion

AI web scraping is changing how businesses extract and operationalize web data in 2026. Gone are the days when organizations had to struggle with traditional scrapers, especially on dynamic and JavaScript-heavy sites.

AI web scraping tools like GPTBots, Apify, Octoparse, and Bright Data are enabling B2B organizations to automate large-scale and context-aware data collection with precision. These platforms combine machine learning, NLP, and adaptive parsing to deliver structured, reliable insights without constant maintenance.

Therefore, it's time to start building web scraping AI agents and drive better business decisions. And what better way to begin than using the no-code visual builder of GPTBots to create and scale intelligent scraping agents without writing a single line of code?
